- Newly published research by a leading Charles Sturt University AI researcher could find applications in video games and virtual reality training systems, and could be used by researchers across a range of scientific disciplines

- Accurate computational models of perception of ambiguous figures (optical illusions) have been elusive, until now

- The ability to conduct this form of research is unique and very valuable

A Charles Sturt University researcher has developed code that models some behaviours of the brain to help explain why and how people see optical illusions.

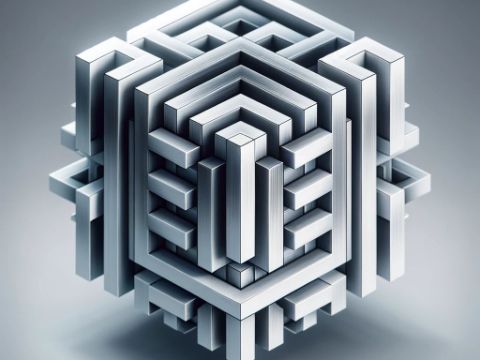

Principal Research Fellow, Physics and Machine Learning Lead in the Charles Sturt Artificial Intelligence and Cyber Futures Institute (AICFI) Dr Ivan Maksymov (pictured, inset) recently published a journal article describing how he designed and trained a deep neural network model to simulate human perception of the Necker cube, an ambiguous drawing with several alternating possible interpretations.

The paper, ‘Quantum-Inspired Neural Network Model of Optical Illusions’, was published in the online open access MDPI journal Algorithms (volume 17, issue 1, January 2024).

Dr Maksymov has previously worked on optical illusions and more recently worked for some time in quantum modelling.

Although Dr Maksymov’s research is ‘blue sky’, that is, it has been driven by curiosity, its outcomes may be applied in psychology, psychiatry and vision science.

“It can also be used to train pilots, astronauts and operators of drones,” Dr Maksymov said.

“Optical illusions can significantly affect a pilot’s ability to fly safely, and every pilot should be prepared to handle them.”

Although Dr Maksymov’s project is a work in progress, the simplicity of the algorithm he developed promises to enable flight training centres such as the Australian Airline Pilot Academy (AAPA) at Wagga Wagga to have software that will help investigate how pilots perceive and handle optical illusions.

“This paper examines Quantum-Inspired Neural Network Models, essentially through complex coding that will represent some behaviours of the brain, to provide a theoretical simulation of how the brain might perceive the world and make decisions; in this case, it is how humans might perceive optical illusions,” Dr Maksymov said.

“This same Neural Network Model could be advantageous for psychologists, behavioural scientists, vision scientists, machine learning experts, developers of virtual reality systems and video games, as well as trainers of astronauts.

“It would be great if my model could be used to train pilots in regional NSW.”

The research paper explains that ambiguous optical illusions have been a paradigmatic object of fascination, research and inspiration in arts, psychology and video games.

However, accurate computational models of perception of ambiguous figures have been elusive.

Dr Maksymov designed and trained a deep neural network model to simulate human perception of the Necker cube (an example, left), an ambiguous drawing with several alternating possible interpretations.

The weights of the neural network's connections were defined using a quantum generator of truly random numbers. In agreement with the emerging concepts of quantum artificial intelligence and quantum cognition, the research reveals that the actual perceptual state of the Necker cube is a qubit-like superposition of the two fundamental perceptual states predicted by classical theories.
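For readers who want a feel for what a "qubit-like superposition" of two perceptual states means, the following is a toy sketch only, not the model from the paper. It assumes a single layer of randomly drawn weights, real-valued amplitudes, and a seeded pseudo-random generator standing in for a quantum random number source; the function names and input values are hypothetical.

```python
import math
import random


def random_weights(n_in, n_out, rng):
    """Draw an n_out x n_in weight matrix from rng.

    Assumption: rng is an ordinary pseudo-random generator used as a
    stand-in for a quantum source of random numbers.
    """
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


def perceptual_state(inputs, weights):
    """Map inputs to two amplitudes, normalised so their squares sum to 1."""
    raw = [sum(w * x for w, x in zip(row, inputs)) for row in weights]
    norm = math.sqrt(sum(a * a for a in raw))
    if norm == 0.0:
        # Degenerate case: fall back to an equal superposition.
        return [1.0 / math.sqrt(2.0)] * len(raw)
    return [a / norm for a in raw]


def perception_probabilities(state):
    """Squared amplitudes give the chance of reporting each cube orientation."""
    return [a * a for a in state]


rng = random.Random(0)  # seeded so the sketch is repeatable
weights = random_weights(n_in=4, n_out=2, rng=rng)
state = perceptual_state([1.0, 0.5, -0.5, 0.25], weights)  # hypothetical input features
p_first, p_second = perception_probabilities(state)
print(f"orientation 1: {p_first:.3f}, orientation 2: {p_second:.3f}")
```

In this sketch the perceived state is neither orientation outright but a weighted mix of both, with the two probabilities always summing to one.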

“The results are also useful for researchers working in the fields of machine learning and vision, psychology of perception and quantum-mechanical models of the human mind and decision making,” Dr Maksymov said.

Dr Maksymov cautioned that he does not claim that quantum processes may take place in the human brain. However, he argues that a quantum-like mathematical description of mental states may help better understand how humans perceive the world and make decisions.

“Collecting data and studying the brain is complex and takes time,” he said. “For example, I completed this paper over two weeks; for a behavioural scientist to do this might take years.

“The ability to conduct this form of research is presently unique and very valuable.”
